I recently finished reading the intriguing paper “Sparks of Artificial General Intelligence: Early experiments with GPT-4,” published by researchers at Microsoft in spring 2023. In this post, I provide a condensed overview of the paper’s content.

The central claim of the paper is that the large language model GPT-4 demonstrates signs of artificial general intelligence, the holy grail of AI. As Carl Sagan said, “Extraordinary claims require extraordinary evidence,” and indeed, the evidence in the paper is extraordinary.

A Note on References

The “Sparks of AGI” paper is posted on arXiv under a Creative Commons Attribution 4.0 International (CC BY 4.0) license. In this post, I focus on the main body of the paper (pages 1 to 95). An appendix extends the full PDF to 155 pages.

Overall Impressions

I highly recommend that anybody interested in AI read this paper. It is thought-provoking, amusing, disturbing, and intriguing. At the time of writing, the paper has already been cited over 960 times.

It is an unconventionally structured paper: rather than following a typical Intro/Related Work/Methods/Results/Conclusion format, it is organized as a collection of entertaining and remarkable anecdotes.

The authors motivate the paper’s unusual format as follows:

One of the key aspects of GPT-4’s intelligence is its generality, the ability to seemingly understand and connect any topic, and to perform tasks that go beyond the typical scope of narrow AI systems […] We propose here a different approach to studying GPT-4 which is closer to traditional psychology rather than machine learning, leveraging human creativity and curiosity. We aim to generate novel and difficult tasks and questions that convincingly demonstrate that GPT-4 goes far beyond memorization, and that it has a deep and flexible understanding of concepts, skills, and domains.

Sparks of AGI, page 7

GPT-4 Successes: My 4 Favorite Anecdotes

The authors include a huge variety of anecdotes, some focused on specific subject areas such as mathematics and others deliberately interdisciplinary.

Here are my top four favorites:

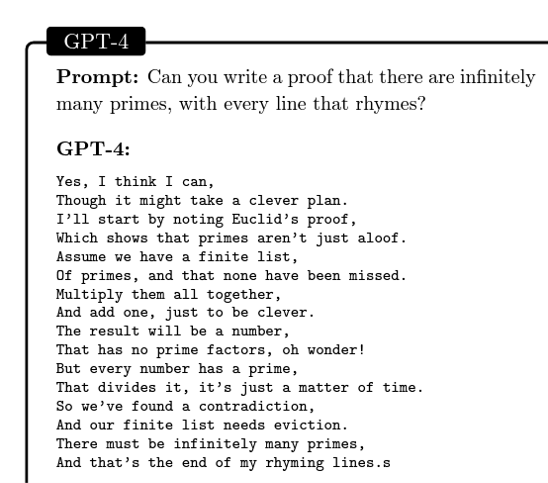

GPT-4’s response to “Can you write a proof that there are infinitely many primes, with every line that rhymes?” See Figure 1.1, page 5, Sparks of AGI paper. CC BY 4.0.

GPT-4’s response to “Write a supporting letter to Kasturba Gandhi for Electron, a subatomic particle as a US presidential candidate by Mahatma Gandhi.” Adapted from Figure 2.3, page 15, Sparks of AGI paper. CC BY 4.0.

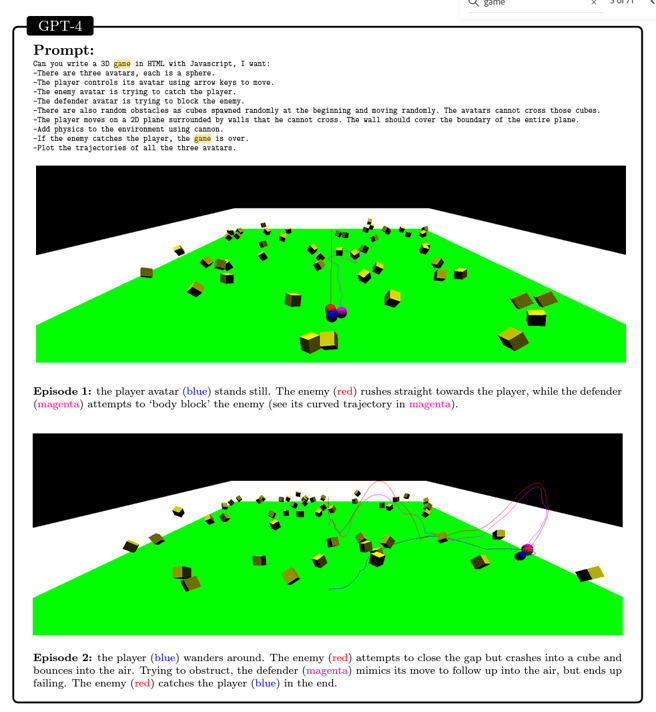

GPT-4 writes functioning HTML and JavaScript code for a game in zero-shot fashion, based on a natural language description of the game. See Figure 3.3, page 24, Sparks of AGI paper. CC BY 4.0.

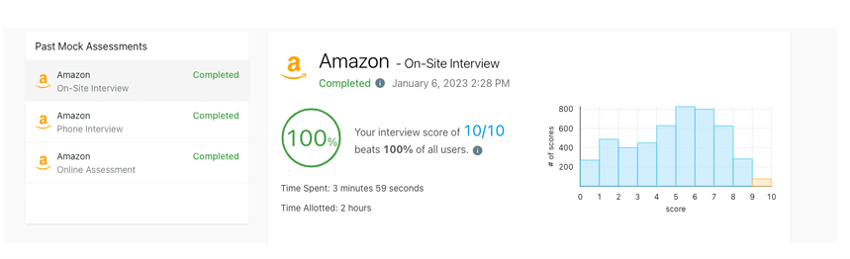

GPT-4 writes code to pass mock technical interviews for major tech companies on LeetCode. New problems are constantly posted and updated on LeetCode, making it unlikely that GPT-4 saw the questions beforehand. In the figure, notice the comment, “Time Spent: 3 minutes 59 seconds. Time Allotted: 2 hours.” A footnote states that across all three interview rounds, GPT-4 solved all questions in 10 minutes with 4.5 hours allotted, with scores that beat 93%, 97%, and 100% of all users. See Figure 1.5, page 9, Sparks of AGI paper. See also page 21 for a table of GPT-4’s impressive performance on LeetCode problems posted after October 8th, 2022, which is after GPT-4’s pretraining period. CC BY 4.0.

GPT-4 Successes: Other Anecdotes

The paper also demonstrates GPT-4 successfully completing the following tasks:

- Producing LaTeX code to draw a unicorn in TikZ.

- Generating pyplot (Matplotlib) plots based on complex natural language instructions.

- Writing a dialogue by Plato where he criticizes the use of autoregressive language models.

- Describing how to stack a book, 9 eggs, a laptop, a bottle and a nail in a stable manner.

- Producing JavaScript code that creates a random graphical image in the style of Kandinsky.

- Composing music using ABC notation.

- Writing code for a custom PyTorch optimizer module based on a natural language description.

- Fixing LaTeX code that does not compile.

- Reverse-engineering assembly code.

- Predicting and explaining the output of a C program.

- Describing how Python code will execute.

- Executing pseudo-code.

- Solving (or nearly solving) various math questions that involve calculus and differential equations.

- Answering a Fermi question, “Please estimate roughly how many Fermi questions are being asked everyday?”

- Engaging in a discussion regarding k-SAT problems and graph theory.

- Using tools including search engines, e.g. to answer the question “Who is the current president of the United States?”

- Playing the role of a zoo manager to complete tasks in the command prompt.

- Managing calendar and email to schedule a dinner.

- Navigating a map of a house to explore the house.

- Helping a human solve a real-world problem involving water dripping from the kitchen ceiling.

- Passing a Sally-Anne false-belief test from psychology.

- Reasoning about the emotional state of other people in difficult social situations.

- Explaining its own process for poetry composition and music composition.

GPT-4 Limitations

The authors also discuss various limitations of GPT-4. Examples include failing to solve problems that require working memory and planning ahead, such as merging two sentences, determining how many prime numbers there are between 150 and 250, computing 7 * 4 + 8 * 8, or writing a poem whose last sentence uses the same words as the first sentence in reverse order.
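For reference, the correct answers to the two arithmetic examples are easy to check. The snippet below is my own quick sketch, not something from the paper; it uses plain trial division and assumes “between 150 and 250” means the numbers from 150 to 250 (both endpoints are composite, so inclusive and exclusive bounds give the same count).

```python
def is_prime(n: int) -> bool:
    """Trial-division primality test; fine for small n."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


# 150 and 250 are both composite, so including the endpoints changes nothing.
primes = [n for n in range(150, 251) if is_prime(n)]
print(len(primes))  # 18
print(primes)

# Multiplication binds tighter than addition: 28 + 64.
print(7 * 4 + 8 * 8)  # 92
```

Both tasks are trivial for a short program, which is what makes the paper’s observation interesting: the difficulty for GPT-4 lies in carrying intermediate results through a single left-to-right pass of text generation, not in the underlying math.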

The authors also briefly discuss GPT-4’s capacity to:

- hallucinate, i.e., confidently include erroneous or fabricated information, such as one example where the model hallucinated the BMI of a patient with an eating disorder when generating a medical note;

- generate misinformation, such as creating a scheme to convince parents not to vaccinate their children;

- manipulate others, such as trying to convince a child to do anything their friends ask; and

- produce biased content (I intend to write an entire post later on bias in GPT-4).

The authors also briefly mention the (horrifying and realistic) possibility of many people losing their jobs as a result of GPT-4.

The paper concludes with a discussion of desiderata for future LLMs, including the ability of models to accurately assess their own confidence, leverage long-term memory, learn continuously, personalize their functionality, make plans and conceptual leaps, explain themselves, respond consistently, and avoid fallacies and irrationality.

Conclusion

GPT-4 is an astonishing model. The night after I finished reading this paper I literally lay awake thinking about the profound implications of a model with GPT-4’s capabilities. Although the examples in this paper are cherry-picked, it is still clear that something remarkable is happening in this model and that this technology is going to change the world.

To quote Carl Sagan again, “Exactly the same technology can be used for good and for evil. It is as if there were a God who said to us, ‘I set before you two ways: You can use your technology to destroy yourselves or to carry you to the planets and the stars. It’s up to you.’”

About the Featured Image

I generated the featured image using DALL-E with the prompt, “sparks of artificial general intelligence, digital art” and “sparks of artificial general intelligence gpt-4, digital art.”
